ML/AI Tailored to Science

Machine Learning (ML) and Artificial Intelligence (AI) have become core skills for data-driven research. With the emergence of foundation models—trained on massive datasets and adaptable to different domains—researchers and developers alike can now solve problems that were once too complex or resource-intensive.

Unified, Scalable and Reproducible Workflows

CyVerse provides the infrastructure to easily integrate cutting-edge ML/AI tools into research workflows. Through the Discovery Environment, users can access familiar workbenches like Jupyter and RStudio, as well as rapid development tools like Gradio and Streamlit.

At the core is the CyVerse Data Store, a robust data management system that integrates with DVC and MLflow to support reproducibility, dataset versioning, and team collaboration.

As a researcher, you can tap into specialized hardware, including HPC clusters, academic GPU-enabled clouds, NSF ACCESS resources, and commercial cloud platforms. Tools like CyVerse CACAO automate cloud workflows, making it easier to scale and reproduce ML/AI analyses across platforms.

Discover What You Can Do with CyVerse

Give your team the pragmatic CyVerse advantage: CyVerse can help you incorporate the ML/AI components you need for your next proposal, grant, course, or workshop. We also offer architectural guidance and customization services tailored to the specific requirements of your ML/AI analysis workflows.

- Learn more about CyVerse

- CyVerse webinars on ML/AI

- UArizona Data Lab's webinars on ML/AI topics

- Machine learning wiki and workshops

Featured Projects

Large Language Models (LLMs) like ChatGPT are transforming education and research. With intuitive interfaces, these tools help students learn more efficiently, support instructors in creating content, and assist researchers in summarizing complex information.

- But they’re not perfect. LLMs can sometimes "hallucinate"—producing inaccurate or fabricated information, especially when asked about topics outside their training data.

- To improve reliability, techniques such as Retrieval-Augmented Generation (RAG) can be used. RAG retrieves relevant passages from data or documents supplied by the user and gives them to the LLM along with the question, so its answers are grounded in that material rather than in its training data alone.
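The retrieve-then-generate idea behind RAG can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular CyVerse tool implements RAG: simple word-count vectors and cosine similarity stand in for the learned embedding models and vector databases that production systems typically use, and the function names (`tokenize`, `cosine`, `retrieve`, `build_prompt`) are hypothetical. The sketch stops at building the augmented prompt; sending it to an LLM is left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase the text and count words. A toy stand-in (assumption) for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Retrieval step: return the k documents most similar to the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, documents: list[str], k: int = 2) -> str:
    """Augmentation step: place the retrieved passages in front of the question.
    The generation step (calling an LLM with this prompt) is intentionally omitted."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    # Small illustrative document collection (made-up sample text).
    docs = [
        "The CyVerse Data Store holds research datasets.",
        "Jupyter and RStudio run in the Discovery Environment.",
        "Broadcast spraying applies chemicals over an entire field.",
    ]
    print(build_prompt("Where do Jupyter notebooks run?", docs, k=1))
```

Swapping `tokenize` and `cosine` for a real embedding model would improve retrieval quality without changing the overall shape: find the most relevant passages, then put them in the prompt so the model answers from them.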

Learn

Prompt Engineering

Prompt engineering is the practice of crafting and refining prompts (the input given to an AI model) to produce the desired output. Well-structured prompts guide models like ChatGPT toward more accurate and useful responses. We're passionate about teaching prompt engineering and showing others how to shape their input to get the best results.

Deep Learning

Deep learning is a subset of ML that uses multi-layered artificial neural networks, loosely inspired by the human brain, to recognize patterns and make decisions. It's the technology behind many AI advancements, from image and speech recognition to natural language processing. For an introduction to the topic, check out past CyVerse webinars:

A Deep Dive into Deep Learning Techniques: A First-of-its-kind Hands-on Workshop

The UArizona Data Lab, part of the Data Science Institute and in partnership with the Institute for Computation & Data-Enabled Insight, is a hub for interdisciplinary research in data science. It provides a space where scholars and learners from many fields work together to investigate, interpret, and derive insights from complex datasets. Through workshops, consultations, and a suite of tools and resources, the UArizona Data Lab helps researchers, students, and industry collaborators explore and learn from their data.

UARIZONA DATA LAB ML/AI WEBINAR PLAYLIST

MACHINE LEARNING WORKSHOPS WIKI

Use Cases

The iNaturalist Open Download tool has made an impact by providing easy access to millions of labeled, crowd-sourced images of living organisms.

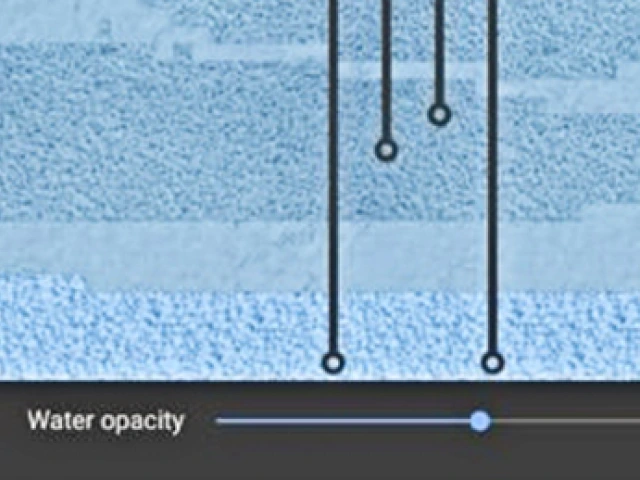

By using container technology, Condon's team overcomes barriers in hydrology research, making advanced models more accessible to researchers, educators, and policymakers.

Researchers are applying and integrating layers of machine learning to program robots, with the goal of ending farmers' reliance on heavy machinery and broadcast spraying.